How AI Is Changing Marketing Compliance Teams: Risks and Real‑World Violations

AI is now embedded in everyday marketing. Copy is drafted by LLMs, social posts are scheduled by agents, and chatbots are answering customer questions at all hours. Adoption is no longer the story. Governance is. As of early 2025, 71% of organizations reported regular use of generative AI, up from 65% in early 2024.

In this article:

- What AI has changed about the work of marketing compliance

- The top AI‑driven risk patterns to watch

- Recent enforcement and court actions you can learn from

- A practical playbook for a defensible AI marketing compliance program

- Where automation fits into your review and monitoring stack

Quick stat: In Q1 2024, 1 in 5 marketing assets reviewed by PerformLine were flagged for potential compliance issues across channels, underscoring the need for scaled monitoring as AI accelerates content volume.

What AI has changed about the work

Speed and surface area

GenAI increases the number of messages, offers, and variations that hit the market. That accelerates review cycles and expands the set of channels that must be monitored continuously.

New “authors” and accountability

Chatbots, agents, and partners can now publish or personalize copy at the edge. Your organization is still on the hook for those representations. At least one tribunal has already treated chatbot statements as the company’s own.

Shifting decision rights

AI is moving from point tools to agents. Gartner expects half of business decisions to be augmented or automated by AI agents, which will change who originates claims and how they’re approved. Compliance must be upstream, not only a gate at the end.

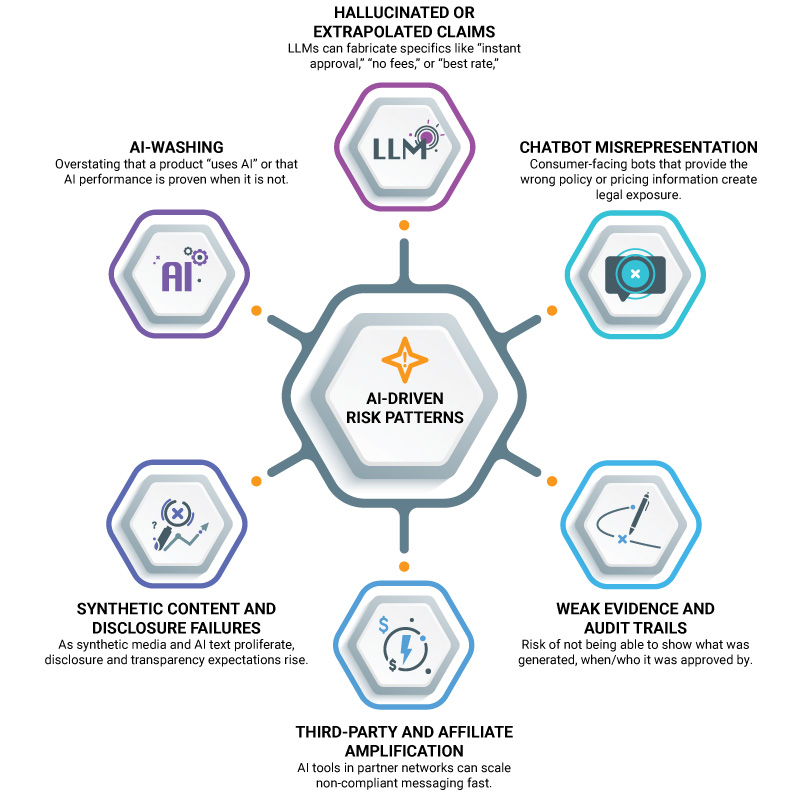

The AI‑driven risk patterns to watch

- AI‑washing

Overstating that a product “uses AI” or that AI performance is proven when it is not. The SEC has already penalized firms for false and misleading statements about AI capabilities.

- Hallucinated or extrapolated claims

LLMs can fabricate specifics like “instant approval,” “no fees,” or “best rate,” which map directly to UDAAP and advertising violations if not substantiated. PerformLine data shows “offer inflation” and similar language are frequent flags in live assets.

- Chatbot misrepresentation

Consumer‑facing bots that provide the wrong policy or pricing information create legal exposure. A 2024 tribunal held a company liable for a chatbot’s inaccurate fare information despite website fine print.

- Synthetic content and disclosure failures

As synthetic media and AI text proliferate, disclosure and transparency expectations rise. The EU AI Act includes transparency obligations for deployers and content labeling duties, which will influence global best practices.

- Third‑party and affiliate amplification

AI tools in partner networks can scale non‑compliant messaging fast. You are accountable for partner claims and must prove ongoing oversight.

- Weak evidence and audit trails

If your team cannot show what was generated, when it was approved, and by whom, it is hard to rebut an enforcement narrative later.
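The audit-trail point above can be made concrete with a small record type. What follows is a minimal sketch, not a prescribed schema: the `GenerationRecord` fields, the `approve` helper, and the `matches_approved` check are all hypothetical names chosen for illustration. It assumes you want to store what was generated, when it was approved, and by whom, plus a hash of the approved text so later edits to a live asset can be detected.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace
from datetime import datetime, timezone
from typing import Optional


def _sha256(text: str) -> str:
    """Return a hex digest that fingerprints the exact text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class GenerationRecord:
    """One audit entry for a piece of AI-generated copy (hypothetical schema)."""
    asset_id: str
    prompt: str
    model: str
    temperature: float
    output: str
    generated_at: str                  # ISO 8601 timestamp
    approved_by: Optional[str] = None  # reviewer identity, set on approval
    approved_at: Optional[str] = None  # ISO 8601 timestamp, set on approval

    def to_json(self) -> str:
        """Serialize the record, including a hash of the output snapshot."""
        payload = asdict(self)
        payload["output_sha256"] = _sha256(self.output)
        return json.dumps(payload, sort_keys=True)


def approve(record: GenerationRecord, reviewer: str,
            when: Optional[datetime] = None) -> GenerationRecord:
    """Return a new record stamped with the reviewer and approval time."""
    when = when or datetime.now(timezone.utc)
    return replace(record, approved_by=reviewer, approved_at=when.isoformat())


def matches_approved(record: GenerationRecord, live_text: str) -> bool:
    """True only if the record was approved and the live text is unchanged."""
    if record.approved_by is None:
        return False
    return _sha256(live_text) == _sha256(record.output)
```

Because the record is immutable, approval produces a new entry rather than overwriting the original, which keeps the generation event and the sign-off distinguishable in whatever store you append them to.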

Real‑world violations and actions to learn from

SEC “AI‑washing” penalties

In March 2024, the SEC announced settled charges against two investment advisers for false and misleading AI statements, totaling $400,000 in penalties. The message was simple. If you use AI in your marketing story, you need evidence.

FTC’s Operation AI Comply

In September 2024, the FTC launched a sweep against deceptive AI claims and tools used to deceive consumers, including actions involving automated legal services and AI writing tools used to generate fake reviews. The agency has also warned businesses to “keep your AI claims in check.”

Automators AI settlement

In February 2024, the FTC secured a settlement and lifetime bans for operators behind an “AI‑powered” e‑commerce money‑making scheme that made unfounded earnings claims. AI hype does not excuse substantiation.

Chatbots in banking under scrutiny

The CFPB’s 2023 issue spotlight flagged risks from bank chatbots, including consumer confusion, complaint volume, and legal exposure when bots give inaccurate or incomplete information.

Case law on chatbot liability

In Moffatt v. Air Canada (2024), the tribunal found the airline liable for negligent misrepresentation based on its website chatbot’s statements and awarded compensation. Disclaimers did not shift responsibility.

A defensible playbook for AI in marketing compliance

- Build and maintain a claim inventory

Catalog every factual, comparative, pricing, and performance claim your brand makes, including AI‑related assertions. Tag each with required substantiation and the owners who can approve changes.

- Create an AI‑specific negative claim library

List prohibited phrases tied to UDAAP and advertising risk, like “guaranteed,” “instant approval,” “lowest rate,” “no fees,” and “first AI [category]” unless strictly true and qualified. Deploy these as rules across your review and monitoring stack.

- Gate LLM outputs with human‑in‑the‑loop review

Treat LLMs like a prolific junior copywriter. Require human review for factual claims, rates, fees, and legal qualifiers. Capture the prompt, model, temperature, and output snapshot for audit.

- Govern chatbots as publishing channels

Limit bots to approved knowledge, enforce escalation to humans for pricing, eligibility, or legal topics, show last‑updated dates, and log conversations. The CFPB and case law have made clear that inaccurate bot guidance is your responsibility.

- Monitor every channel continuously

Do not rely on pre‑flight review alone. As assets change post‑approval, ongoing monitoring catches edits and partner deviations quickly. In Q1 2024, PerformLine monitored 5.7M assets and flagged 1.1M for review, a reminder of the scale required.

- Substantiate AI claims or do not make them

If you claim “AI‑powered,” say what that means and provide support. The FTC’s guidance is explicit that AI claims must be truthful, non‑misleading, and evidence‑based.

- Prepare an incident‑response routine for content

When something slips through, preserve logs, pull the asset across channels, publish a correction if needed, and document the remediation.
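As an illustration of the negative claim library step in the playbook above, here is a minimal sketch of phrase rules applied to draft copy. The phrases come from the list in this article; the regular expressions, rule IDs, and the `Rule`, `Flag`, `scan`, and `needs_human_review` names are assumptions made for the example, not any vendor's rule format. A match is a prompt for human review rather than a verdict, since a flagged phrase may be acceptable when it is strictly true and properly qualified.

```python
import re
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Rule:
    rule_id: str
    pattern: str   # regular expression, matched case-insensitively
    reason: str    # why a reviewer should look at this phrase


@dataclass(frozen=True)
class Flag:
    rule_id: str
    matched_text: str
    start: int     # character offset of the match in the scanned text
    reason: str


# Illustrative rules only; a real library would be owned by compliance.
NEGATIVE_CLAIMS: Sequence[Rule] = (
    Rule("guaranteed", r"\bguaranteed?\b", "Absolute promise needs substantiation"),
    Rule("instant-approval", r"\binstant(?:ly)?\s+approv(?:al|ed)\b",
         "Approval timing claim needs substantiation"),
    Rule("lowest-rate", r"\b(?:lowest|best)\s+rates?\b",
         "Comparative rate claim needs substantiation"),
    Rule("no-fees", r"\bno\s+fees?\b", "Fee claim needs qualification"),
    Rule("first-ai", r"\bfirst\s+AI\b", "Superlative AI claim needs evidence"),
)


def scan(text: str, rules: Sequence[Rule] = NEGATIVE_CLAIMS) -> List[Flag]:
    """Return every rule match in the text, ordered by position."""
    flags: List[Flag] = []
    for rule in rules:
        for match in re.finditer(rule.pattern, text, flags=re.IGNORECASE):
            flags.append(Flag(rule.rule_id, match.group(0), match.start(), rule.reason))
    return sorted(flags, key=lambda flag: flag.start)


def needs_human_review(text: str) -> bool:
    """Route copy to a reviewer when any negative-claim rule matches."""
    return bool(scan(text))
```

The same `scan` function could run both before publication and against live assets, so one rule set covers both review and monitoring. Keyword rules like these will miss paraphrases, which is one reason the playbook pairs them with human review.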

What this means for resourcing

Even with small teams, the work is manageable if you automate the repetitive parts, centralize evidence, and focus humans where judgment is required. AI will not replace reviewers any time soon. It will raise expectations for speed and scale. Plan accordingly.

Gartner sees a near‑term future where AI agents augment or automate half of business decisions. If your controls are not built into those decision points, you will be chasing violations after the fact.

How PerformLine helps

- Pre-Publication Review to accelerate pre‑publication compliance approvals and capture a full audit trail.

- Omni‑Channel Monitoring across the web, social, email, calls, and messages to catch changes and partner deviations fast.

- Proprietary Rulebooks to flag AI‑washing phrases, inflated offers, instant approval language, and missing disclosures at scale.

- Discovery to surface unknown brand placements and affiliate content that mention your offers.

Our clients use PerformLine to cut manual review time, scale oversight, and reduce the likelihood that AI‑generated content creates a regulatory problem later.

FAQs

What is AI‑washing?

Using “AI” in claims without accurate, substantiated detail, or implying capabilities you do not have. The SEC has already penalized firms for this.

Does a disclaimer protect us if our chatbot gives customers wrong information?

No. Tribunals have held companies liable for chatbot misstatements. Treat bot outputs as your own and govern them accordingly.

Do we need to disclose AI‑generated content?

Yes. While there’s no single federal rule yet, the FTC and several states (like California and Texas) expect clear labeling of AI‑generated or synthetic content to avoid misleading consumers. Transparency is the safest compliance approach.

How should we review LLM‑generated marketing copy?

Use a human‑in‑the‑loop gate, apply a negative claim library, check substantiation, and log prompts and outputs for audit. This reduces risk from hallucinated or exaggerated claims.

What standard applies to AI claims in marketing?

Claims must be truthful, not misleading, and supported by evidence. The FTC has an active enforcement sweep targeting deceptive AI claims and tools.

Is this article legal advice?

No. This article provides general information for compliance professionals.

Want to see how teams automate AI marketing compliance without sacrificing speed?

Request a demo and we will show examples across web, social, email, and partner channels.